Content moderation is still primarily conducted by humans – here’s a game that helps you empathize

Charlotte Freihse, Felix Sieker

CONTENT WARNING: Descriptions of violence and hate

Meta, TikTok & Co couldn’t exist in their current form without tens of thousands of content moderators. As users, we rely on them daily to keep our digital spaces healthy. Yet their work, and the conditions under which they do it, receive little attention. We argue that it is essential to raise awareness of how difficult the job of content moderators is. The mobile game “Moderator Mayhem”, launched by Techdirt in May 2023, may help with that.

When scrolling through our newsfeeds on any social platform, we want to consume content without fear of being exposed to disturbing material such as hate speech, incitement to violence, or physical harm against people or animals; the list is long. We can enjoy a comparatively safe online experience largely thanks to the people who sort through graphic and disturbing material, removing illegal or prohibited content before it reaches the average user.

Despite advances in artificial intelligence, content moderation is still mostly conducted by humans. Particularly for controversial topics, fully automated content moderation based on machine learning cannot yet perform the task reliably: the systems developed to moderate content automatically are not yet able to deal with ambiguity. Their effectiveness is therefore limited when a decision requires context-specific information. As a result, human content moderators are essential to the social media ecosystem, yet they are not treated accordingly. Instead, stories and reports of severe mental health trauma and violations of employee rights have made headlines. One of the most recent is the case of Sama, a contractor for Meta, which is facing a lawsuit over labor rights violations against content moderators in Kenya.

While official numbers are lacking, the accounts of individual content moderators are cause for concern. In an article in the Financial Times, a content moderator working as a contractor for Facebook in Kenya estimated that he had roughly 55 seconds to assess each Facebook video and that he had seen more than 1,000 people being beheaded. Working conditions at other tech platforms are no different. A content moderator for TikTok in Morocco told Business Insider that she had only 10 seconds to review a TikTok video, which often contained similarly gruesome content. Cengiz Haksöz, a content moderator working for the Meta contractor Telus in Essen, Germany, speaks of 4,000 hours of violent material he went through during his five years on the job, leaving him with severe mental trauma and no support from his employer.

Empathizing with content moderation: The Moderator Mayhem game

The numbers above indicate the poor working conditions under which moderators do their job, but they cannot convey how complex the job itself is. To address this, the tech blog Techdirt launched a mobile game called Moderator Mayhem in May 2023 that simulates the role of a content moderator. Players take on the position of a content moderator for a fictitious review site called TrustHive, which resembles platforms like Yelp. Their task is to moderate a diverse range of user-generated content, such as reviews of products, events, venues, and more. The game avoids violent imagery: the most gruesome and traumatizing content is not depicted.

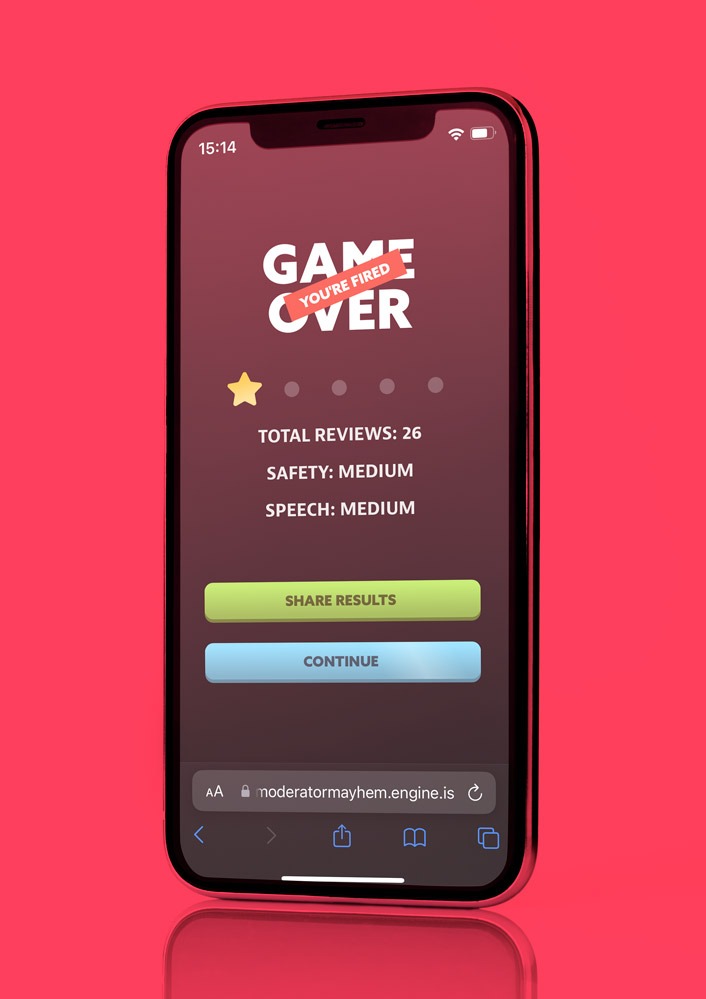

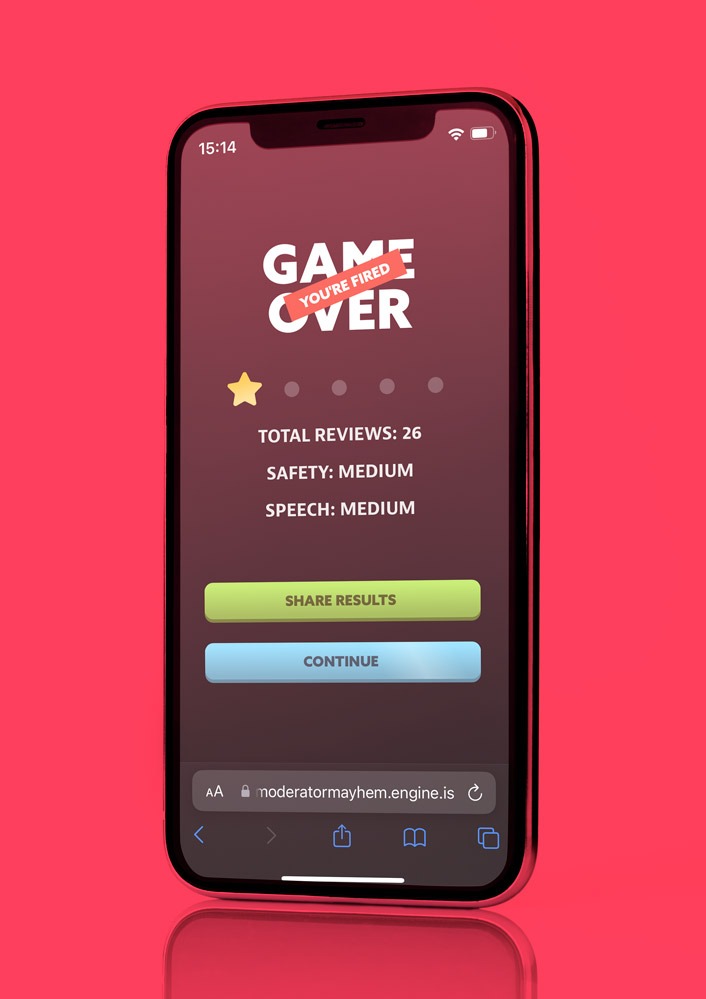

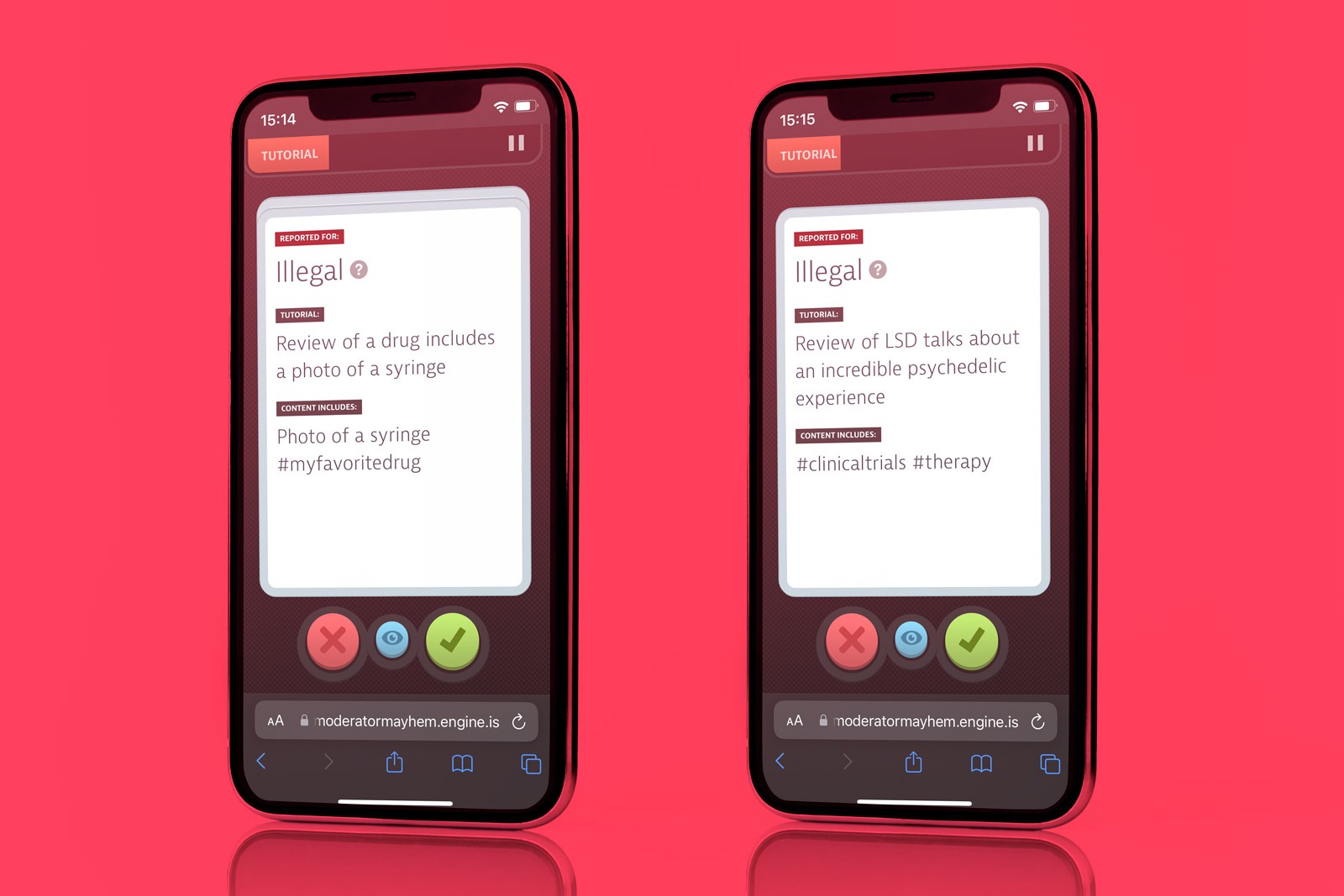

Each piece of content is reported under a specific label, such as self-harm, bigotry or copyright. The player then reviews it, swiping right to keep the review up or left to take it down. The game consists of several rounds, each giving the player only limited time to complete the task. Each new round brings more difficult edge cases, along with appeals against decisions made earlier. After each round, a fictional supervisor rates the player, who is then either promoted to the next round or fired. The game also provides a help button that offers more context when it is harder to decide what to do with a given review. However, using the help button costs valuable time. In this way, the game simulates the time pressure under which decisions must be made, exactly what content moderators face in real life.

Key challenges for content moderators: time pressure, limited information, and edge cases

It is not only time pressure and incomplete information that make content moderation a difficult task. A particular challenge lies in the many reported reviews for which there is no straightforward answer. These edge cases concern socially relevant and controversial topics. What these topics have in common is that there is no right or wrong answer; instead, they reflect debates that are still evolving. One example in the game is a review of LSD that was reported under the label “illegal”. However, the review does not praise the drug’s recreational use but rather its therapeutic effects, and it includes the hashtags #clinicaltrials and #therapy.

Another example is a review of a 19th-century painting that was reported under the label nudity. Depictions of nudity in art are controversial in some countries. The challenge here is to balance free speech with the specific laws of a given country.

Both examples highlight the significance of context in the decision-making process of a content moderator.

Advocating for improvements instead of techno-solutionism in content moderation

The game thus provides a vivid illustration of a content moderator’s daily work. However, it also highlights a significant discrepancy between the importance of effective content moderation and the actual working conditions of the professionals in this field. This discrepancy deserves greater attention and should be addressed more prominently in the ongoing debate on content moderation. Rather than overstating the potential of fully automated content moderation, which may never truly materialize, the focus should be on improving the working conditions of the people who have been doing this work for years.

A collaborative manifesto, developed by organizations such as the NGO Superrr Lab and the German trade union ver.di in consultation with content moderators, sets out several essential demands. These include (1) substantial pay rises that reflect the demands of the job, (2) proper mental health care, and (3) an end to outsourcing, with major social media platforms bringing content moderation in-house.

In conclusion, the focus of the debate should shift from idealizing technological solutions to championing better working conditions and well-being for content moderators. This would not only benefit those working in the profession but also contribute to a more responsible approach to content moderation in general.